In this part I will concentrate on the hardware and what I needed to do to get it working.

OK, so I will go with a 19" server for this build. As my company uses Dell servers I'm pretty familiar with those, so I went searching for Dell hardware on eBay.

I need space for at least 8 disks, so it will be something in the 2U range.

What I found while searching is that used Dell R710s are cheap, and they have eight 2.5" bays, which fits my needs pretty well. They also have 4 PCIe slots that can hold adapters for M.2 SSDs, which is pretty nice. Keep in mind though that these servers came out roughly 10 years ago, so all these parts run on older generations of hardware. Don't expect an M.2 PCIe SSD to reach peak performance in a server that old. It will work nicely and fast, but 1 GB/s write speed is not what you will get.
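To put a rough number on that, here is a small back-of-the-envelope sketch. It assumes the R710 slots are PCIe 2.0 and that the SSD would natively use a PCIe 3.0 x4 link; neither is stated above, so treat both as assumptions. The function name is made up for illustration, and the results are theoretical ceilings per direction, not measured values.

```python
# Theoretical PCIe link throughput per direction.
# Assumption: R710 slots are PCIe 2.0, the NVMe SSD is a PCIe 3.0 x4 device.
PCIE_GENERATIONS = {
    2: (5.0, 8 / 10),     # 5 GT/s per lane, 8b/10b line encoding
    3: (8.0, 128 / 130),  # 8 GT/s per lane, 128b/130b line encoding
}


def pcie_bandwidth_gb_per_s(generation: int, lanes: int) -> float:
    """Upper bound in GB/s (10^9 bytes), before any protocol overhead."""
    gigatransfers, efficiency = PCIE_GENERATIONS[generation]
    return gigatransfers * efficiency * lanes / 8  # bits -> bytes


gen2_x4 = pcie_bandwidth_gb_per_s(2, 4)
gen3_x4 = pcie_bandwidth_gb_per_s(3, 4)
print(f"PCIe 2.0 x4: {gen2_x4:.2f} GB/s")
print(f"PCIe 3.0 x4: {gen3_x4:.2f} GB/s")
```

Under these assumptions the older slot offers roughly half the link bandwidth the SSD was designed for, and real-world throughput ends up below the theoretical figure once protocol overhead and the rest of the platform come into play.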

Anyway, I managed to snatch an R710 with 96 GB of ECC RAM and 2 Xeon X5675 @ 3.06 GHz for ~350€, and that is some really nice hardware for that price!

Most of those I found on eBay come with a PERC 6/i controller, which is ... crap. It provides RAID but no JBOD, it only supports disks up to 2 TB and it's a 3 Gbit/s controller, so you really want to get rid of that thing.
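The two limits can be put into numbers with a bit of arithmetic. This is only a sketch: the per-lane figure follows from the 8b/10b encoding used by 3 and 6 Gbit/s SAS, while explaining the 2 TB ceiling by 32-bit sector addressing with 512-byte sectors is my assumption about the cause, not something confirmed for this particular controller. The function names are made up for illustration.

```python
def sas_lane_mb_per_s(line_rate_gbit: float) -> float:
    """Usable MB/s per SAS lane: 8b/10b sends 10 line bits per payload byte."""
    return line_rate_gbit * 1000 / 10


def max_capacity_bytes(lba_bits: int, sector_size: int = 512) -> int:
    """Largest addressable disk for a given LBA width (assumed cause of the limit)."""
    return (2 ** lba_bits) * sector_size


old_lane = sas_lane_mb_per_s(3.0)  # 3 Gbit/s generation
new_lane = sas_lane_mb_per_s(6.0)  # 6 Gbit/s generation
limit = max_capacity_bytes(32)     # assumption: 32-bit LBA, 512-byte sectors

print(f"3 Gbit/s lane: {old_lane:.0f} MB/s")
print(f"6 Gbit/s lane: {new_lane:.0f} MB/s")
print(f"32-bit LBA limit: {limit / 1e12:.2f} TB")
```

For rotating disks the per-lane rate is rarely the bottleneck, but a SATA SSD can saturate a 3 Gbit/s lane, and a 4 TB disk is simply out of reach with a 2 TB addressing limit.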

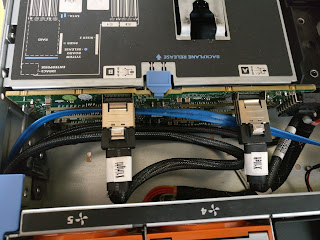

As I am building a ZFS host I do not need any RAID capabilities and I really want JBOD mode, so I need a different storage controller for sure. I picked a Dell H200, which is compatible with the R710 and can be cross-flashed to become a plain LSI 9211-8i controller. I got one of these for ~40€ on eBay as well. The H200 needs different cables than the old PERC 6/i, so you need to get these too. I bought LINDY 33498 2x 4 Mini SAS cables (SFF-8087) on Amazon, which were the cheapest I found at ~15€. They work perfectly fine even though they are a few centimeters too long, but that's no real problem.

My R710 did not come with drive cages, but you can get those cheap on eBay as well.

As described in part one, my target configuration contains these disks:

- 4 * 4 TB rotating HDD

- 2 * 120 GB SSD for ZIL

- 2 * 120 GB SSD for L2ARC

I have eight 2.5" bays in the R710 so that would fit, but for L2ARC I decided to go with M.2 adapter cards for PCIe and put some NVMe SSDs in. I thought about using NVMe for the ZIL as well but decided against it, as a ZIL device only helps on synchronous writes and a normal SSD is fast enough for that, so there is no need to spend the extra money there.
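For anyone unsure what "synchronous write" means in practice, here is a minimal sketch of the difference from the application's point of view. The function names and the block sizes are made up for illustration; it is not a benchmark. On ZFS, the `fsync` calls in the second function are what send a write through the ZIL, while the buffered writes in the first one are collected in memory and written out later.

```python
import os
import tempfile


def write_async(path, blocks):
    """Buffered write: returns as soon as the data sits in memory."""
    with open(path, "wb") as f:
        for block in blocks:
            f.write(block)


def write_sync(path, blocks):
    """Synchronous write: each block must be on stable storage before continuing."""
    with open(path, "wb") as f:
        for block in blocks:
            f.write(block)
            f.flush()              # push Python's buffer to the OS
            os.fsync(f.fileno())   # ask the OS to commit it to disk


blocks = [b"x" * 4096 for _ in range(8)]
with tempfile.TemporaryDirectory() as tmp:
    write_async(os.path.join(tmp, "async.bin"), blocks)
    write_sync(os.path.join(tmp, "sync.bin"), blocks)
    sizes = sorted((name, os.path.getsize(os.path.join(tmp, name)))
                   for name in os.listdir(tmp))
    print(sizes)
```

Both files end up identical; the only difference is when the application is told the data is safe, and that waiting time is what a fast ZIL device shortens.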

For obvious reasons I did not buy used disks but brand new ones. These are the largest part of the cost for this build:

- 2 * SANDISK Plus 120GB for ZIL

- 1 * SANDISK Plus 120GB for OS

- 2 * SAMSUNG SM961 NVMe SSD for L2ARC

You see one SSD for the OS here and I want to explain why. It is possible to boot from ZFS, but it takes some effort, and while it also provides some nice options I did not want to do it for this build. If the OS disk dies I have to do a fresh setup of the OS, but that's it. All the data is in the ZFS pool and has redundancy, so just re-import the pool and you are back online. That's good enough for me and makes life easier in general. I did not want to waste a HDD slot on this disk though, so I bought an adapter that replaces the CD drive and holds the SSD instead. There are a bunch of those available on eBay or Amazon, just make sure to get one with 12.7 mm height.
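The re-import after a fresh OS install boils down to one `zpool import` call. Here is a small sketch that only builds and runs that command; the pool name `tank` and the helper names are placeholders, not what is used in this build. Calling `zpool import` without a pool name lists the pools that can be imported, and `-f` forces the import when the pool was not cleanly exported by the old installation.

```python
import subprocess


def zpool_import_command(pool=None, force=False):
    """Build the argument list for `zpool import` (no pool name = list candidates)."""
    cmd = ["zpool", "import"]
    if force:
        cmd.append("-f")  # pool was last used by another (the old) installation
    if pool is not None:
        cmd.append(pool)
    return cmd


def import_pool(pool, force=False):
    """Run the import; needs root and an installed ZFS, raises on failure."""
    subprocess.run(zpool_import_command(pool, force), check=True)


# "tank" is a placeholder pool name.
print(" ".join(zpool_import_command()))
print(" ".join(zpool_import_command("tank", force=True)))
```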

There is one more important thing in this setup and that's the PSU of the R710! There is a 570 W and an 870 W option. If you run as many disks as I do you have to go with the 870 W one, as the smaller one will complain that it can't fulfill the power budget and the CPUs will be throttled down to their lowest clock speed. IMHO 570 W should easily be enough, but the Dell firmware counts drives, and even though an SSD does not need a lot of power, 9 drives are too much for the 570 W PSU. You need the bigger one and not a second one! The 2 PSU slots are there for redundancy only!

As this is a used server and I want it to be as silent as possible, I have renewed the thermal paste on the CPUs as well. It's pretty easy to do in a server like that, as you have a lot more space to work and the CPU coolers don't use screws but clips. Just pull them off, clean off the old paste with alcohol and apply some decent new thermal paste like Arctic Silver or Noctua NT-H1, which I had lying around.

Flashing the H200 is actually trickier than I expected, but if you know what to watch out for it's easy to do. The process is described pretty well here, but you need a second PC to do it. For whatever reason megarec.exe fails if you try to flash the card in the R710: it just runs for ages and does not do what it should. So if you have an old PC somewhere, put the card in there to flash. If the board does not have a matching PCIe slot you can put the card into the PCIe x16 slot meant for the graphics card. PCIe negotiates the lanes it finds, so a smaller card fits into a larger slot without problems.

I had some issues with the bootable USB stick, as you need DOS for the megarec command. The flash utility is available for Linux, but you need megarec to clean the BIOS image, so you need FreeDOS or something like that. I used the FreeDOS 1.2 full image and dd'ed it onto the USB stick. Then I removed some packages I don't need anyway to make space, and added the firmware images and tools needed. I have bundled what I used to flash here, but obviously I do not take any responsibility that it will work or if anything breaks. All I say is I used those files.
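Writing the image to the stick is a plain block-by-block copy, which is all `dd` does here. Below is a minimal sketch of the same thing in Python; the function name, the image file name and `/dev/sdX` are placeholders. Writing to the wrong device destroys its contents, so double-check the target before running anything like this as root.

```python
import os


def write_image(image_path, device_path, block_size=4 * 1024 * 1024):
    """Copy an image file to a target in fixed-size blocks and return bytes written."""
    written = 0
    with open(image_path, "rb") as src, open(device_path, "wb") as dst:
        while True:
            block = src.read(block_size)
            if not block:
                break
            dst.write(block)
            written += len(block)
        dst.flush()
        os.fsync(dst.fileno())  # make sure everything reached the stick
    return written


# Placeholder names, roughly equivalent to: dd if=freedos.img of=/dev/sdX bs=4M
# write_image("freedos.img", "/dev/sdX")
```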

If you run into problems during flashing the card might stop responding to any call and might look like it's bricked. It's not, just reboot and start over. With all the trouble I had flashing it in the R710 I ran into all kinds of odd messages, but in the end a reboot and a flash of the correct image always cleaned it up.

I deliberately did not flash the BIOS part, as I do not boot from those disks anyway and I don't need RAID, so all I need is a controller that makes the disks available to the OS. According to the linked post, its author managed to flash the card in a way that the internal storage slot accepted it. I did not manage to do that: I get an invalid card message and boot is halted if I try it in the internal storage slot. As I only need 2 of the PCIe slots though, it was OK for me to have the H200 in a regular PCIe slot, and I did not bother to try other flash procedures. After all, booting the R710 with 96 GB of memory takes quite some time...

So after all that flashing fun, which took me some hours to complete, I had what I needed: a controller able to address >2 TB disks in JBOD mode. Just for completeness, this is what I see in the OS at that point:

So flashing was successful and I have all disks available to the OS, ready to build the ZFS pool and do some benchmarking.
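If you want to check the same thing on your own box, one way is to read the disk sizes straight from sysfs. This sketch assumes a Linux host, which is not stated above; the function name is made up for illustration. The `size` file under `/sys/block/<device>/` is always counted in 512-byte units, so a 4 TB disk showing its full size confirms the 2 TB limit is gone.

```python
from pathlib import Path


def list_block_devices(sysfs_root="/sys/block"):
    """Return {device name: size in bytes} for every block device found."""
    devices = {}
    root = Path(sysfs_root)
    if not root.is_dir():  # not Linux, or sysfs not mounted
        return devices
    for entry in sorted(root.iterdir()):
        size_file = entry / "size"
        if size_file.is_file():
            sectors = int(size_file.read_text().strip())
            devices[entry.name] = sectors * 512  # sysfs counts 512-byte units
    return devices


# The list also contains loop and RAM devices, not only physical disks.
for name, size in list_block_devices().items():
    print(f"{name}: {size / 1e12:.2f} TB")
```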

I have built a pool already and did some tests to verify that the setup works, and it does, but I have not yet gone into detailed analysis as I'm currently waiting for the 870 W PSU to arrive. Yes, I know about the PSU issue described above first hand :) I thought I might be fine with mostly SSDs and decided to just give it a try, but unfortunately you really need the stronger PSU for a setup like that. So I built the pool and ran the tests with the server in alarm mode and throttled down to minimal power, which means none of the tests I did so far can really be taken seriously. Still, some first insights show the expected results. Writes directly to the rotating disks are rather slow, as those disks are slow, but as soon as ZIL or ARC come into play things get a lot better. My usual workload will mainly be fed directly from memory, so read latency will be pretty low, and write speeds should be fine as well, as I have ZIL SSDs for synchronous writes and for async I/O it does not matter anyway.

My priority now though is what will be the third part of this series: housing a 19" server in a silencer box in tower format. OK, the tower will be larger than a default tower case, but hey, who cares if it looks good and gets that thing down to a more acceptable noise level. At this point I can't be sure about the result, as I'm still waiting for parts to arrive and for some spare time to actually build it, but I hope for the best. The goal is to get a noise level comparable to my PC, which is not exactly silent but silent enough. If I can manage that with the server it will get the spot of my old silent one next to my computer table; if not, I have to find some other spot, which is not that easy in my flat. I have a storage room on the other side of the wall my table faces, but as I live on the top floor that room gets pretty warm in summer, so that's not my first choice. Let's see how my silencer tower works out in the next part of this series :)
